In the world of technology and digital communication, the terms IT (Information Technology) and ICT (Information and Communication Technology) are often used interchangeably. Although they have much in common, they refer to slightly different domains within the technology world. In this blog post we look at the difference between IT and ICT, explore what the abbreviations mean, and consider which courses you can take if you want to work in one of these exciting fields.

What is IT?

The abbreviation IT stands for ‘Information Technology’. It focuses on managing and processing information using computers and software. At its core, IT is about collecting, storing, protecting, processing, transmitting, and retrieving data. IT professionals often work with database systems, networks, and software development.

What is ICT?

The abbreviation ICT stands for ‘Information and Communication Technology’. It extends the domain of IT by covering not only information technology but also its integration and interaction with communication technology. This includes telecommunications (such as the internet and mobile networks), broadcast media, audiovisual processing and transmission systems, and network-based control and monitoring functions. ICT is therefore a broader term that emphasizes the role of unified communications within information technology.

The difference between IT and ICT

The main difference between IT and ICT lies in the ‘C’ for communication. ICT covers a broader spectrum, emphasizing communication technologies alongside the traditional IT functions. The concepts covered by IT are part of ICT, but not every ICT concept falls under the term IT. While IT concentrates on information systems and software development, ICT also includes areas such as mobile communication and the internet. It is important to keep this in mind when using the terms in professional or academic settings, especially when referring to specific concepts that fall under one term but not the other.

Industry

Within the information industry, the terms ICT and IT are often used interchangeably. However, the definition of ICT is becoming more sharply delineated as the industry advances. It is important to know whether the term is being used correctly, because a misused term can skew the entire context of an article or text. The definition of ICT will continue to grow with the industry as new forms of communication are researched and developed.

Conclusion

Whether you prefer the focused approach of IT, with its emphasis on information systems, or the broader horizon of ICT, which also includes communication technologies, both fields offer fascinating opportunities for future technology professionals. Choosing a career in IT or ICT means choosing a future full of innovation and challenge, with the chance to make a significant impact on both business and society. With the right education and a passion for technology, you can shape your path to success in this dynamic, constantly evolving industry.

At Rip 123 IT EU, the tools and work include:

- WordPress, plus security

- LibreOffice

- FileZilla

- Chrome

- Firefox

- Websites built:

belinda-smits nl

belinda-smits online

boukje-ruighaver nl

boukje-ruighaver online

rip-herrewijnen eu

herrewijnen-stories nl

herrewijnen-stories online

herrewijnen com

herrie-uitgeverij eu

rherip.nl

reisverslagen eu

reisverslagen website

rip-herrewijnen nl

rip-it nl

rip123it eu

rip123it nl

rip123it online

rip nl

History

Information technology (IT) is a broad professional category covering functions including building communications networks, safeguarding data and information, and troubleshooting computer problems.


Information technology (IT) is a set of related fields within information and communications technology (ICT) that encompass computer systems, software, programming languages, data and information processing, and storage.[1] Information technology is an application of computer science and computer engineering.

The term is commonly used as a synonym for computers and computer networks, but it also encompasses other information distribution technologies such as television and telephones. Several products or services within an economy are associated with information technology, including computer hardware, software, electronics, semiconductors, internet, telecom equipment, and e-commerce.[2][a]

An information technology system (IT system) is generally an information system, a communications system, or, more specifically speaking, a computer system — including all hardware, software, and peripheral equipment — operated by a limited group of IT users, and an IT project usually refers to the commissioning and implementation of an IT system.[4] IT systems play a vital role in facilitating efficient data management, enhancing communication networks, and supporting organizational processes across various industries. Successful IT projects require meticulous planning and ongoing maintenance to ensure optimal functionality and alignment with organizational objectives.[5]

Although humans have been storing, retrieving, manipulating, analysing and communicating information since the earliest writing systems were developed,[6] the term information technology in its modern sense first appeared in a 1958 article published in the Harvard Business Review; authors Harold J. Leavitt and Thomas L. Whisler commented that “the new technology does not yet have a single established name. We shall call it information technology (IT).”[7] Their definition consists of three categories: techniques for processing, the application of statistical and mathematical methods to decision-making, and the simulation of higher-order thinking through computer programs.[7]



Based on the storage and processing technologies employed, it is possible to distinguish four distinct phases of IT development: pre-mechanical (3000 BC – 1450 AD), mechanical (1450 – 1840), electromechanical (1840 – 1940), and electronic (1940 to present).[6]

Ideas of computer science were first discussed before the 1950s at the Massachusetts Institute of Technology (MIT) and Harvard University, where researchers had begun thinking about computer circuits and numerical calculations. As time went on, the field of information technology and computer science became more complex and was able to handle the processing of more data. Scholarly articles began to be published by different organizations.[8]

In the early days of computing, Alan Turing, J. Presper Eckert, and John Mauchly were considered some of the major pioneers of computer technology in the mid-1900s. Most of their efforts were focused on designing the first digital computer. Topics such as artificial intelligence also began to be raised, as Turing started to question the technology of the time.[9]

Devices have been used to aid computation for thousands of years, probably initially in the form of a tally stick.[10] The Antikythera mechanism, dating from about the beginning of the first century BC, is generally considered the earliest known mechanical analog computer, and the earliest known geared mechanism.[11] Comparable geared devices did not emerge in Europe until the 16th century, and it was not until 1645 that the first mechanical calculator capable of performing the four basic arithmetical operations was developed.[12]

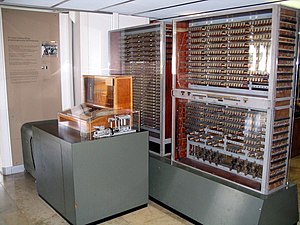

Electronic computers, using either relays or valves, began to appear in the early 1940s. The electromechanical Zuse Z3, completed in 1941, was the world’s first programmable computer, and by modern standards one of the first machines that could be considered a complete computing machine. Colossus, developed during the Second World War to decrypt German messages, was the first electronic digital computer. Although it was programmable, it was not general-purpose, being designed to perform only a single task. It also lacked the ability to store its program in memory; programming was carried out using plugs and switches to alter the internal wiring.[13] The first recognizably modern electronic digital stored-program computer was the Manchester Baby, which ran its first program on 21 June 1948.[14]

The development of transistors in the late 1940s at Bell Laboratories allowed a new generation of computers to be designed with greatly reduced power consumption. The first commercially available stored-program computer, the Ferranti Mark I, contained 4050 valves and had a power consumption of 25 kilowatts. By comparison, the first transistorized computer, developed at the University of Manchester and operational by November 1953, consumed only 150 watts in its final version.[15]

Several other breakthroughs in semiconductor technology include the integrated circuit (IC) invented by Jack Kilby at Texas Instruments and Robert Noyce at Fairchild Semiconductor in 1959, silicon dioxide surface passivation by Carl Frosch and Lincoln Derick in 1955,[16] the first planar silicon dioxide transistors by Frosch and Derick in 1957,[17] the MOSFET demonstration by a Bell Labs team,[18][19][20][21] the planar process by Jean Hoerni in 1959,[22][23][24] and the microprocessor invented by Ted Hoff, Federico Faggin, Masatoshi Shima, and Stanley Mazor at Intel in 1971. These important inventions led to the development of the personal computer (PC) in the 1970s, and the emergence of information and communications technology (ICT).[25]

By 1984, according to the National Westminster Bank Quarterly Review, the term information technology had been redefined as “the convergence of telecommunications and computing technology (…generally known in Britain as information technology).” The term then began to appear in 1990 in documents of the International Organization for Standardization (ISO).[26]

By the twenty-first century, innovations in technology had already revolutionized the world as people gained access to different online services. This changed the workforce drastically: thirty percent of U.S. workers were already in careers in this profession. 136.9 million people were personally connected to the Internet, which was equivalent to 51 million households.[27] Along with the Internet, new types of technology were being introduced across the globe, improving efficiency and making everyday tasks easier.

As technology revolutionized society, millions of processes could be done in seconds. Innovations in communication were also crucial as people began to rely on the computer to communicate through telephone lines and cable. The introduction of email was considered revolutionary as “companies in one part of the world could communicate by e-mail with suppliers and buyers in another part of the world…”.[28]

Beyond personal use, computers and technology have also revolutionized the marketing industry, resulting in more buyers of products. In 2002, Americans spent more than $28 billion on goods over the Internet alone, while e-commerce a decade later resulted in $289 billion in sales.[28] And as computers rapidly become more sophisticated, people in the twenty-first century are using them more and becoming more reliant on them.

Source: Wikipedia